Measuring Test Quality: Reliability and Validity

How do you know if the pre-employment test you are using is filtering the right candidates for your organization? In this article we explore how to determine if a test is valid and reliable.

Research shows that almost 85% of today's companies use some form of pre-employment assessment in their hiring procedure, so there is a good chance that your organization uses tests to hire new employees.

Does that mean you can pick up any test, implement it for your hiring needs, and end up with stellar employees at work? Definitely not. Pre-employment tests need to be vetted to determine their usefulness. To do this, you need to answer two questions:

- How reliable are the scores obtained in the test? - Reliability

- How well can the test predict your potential employee's job performance? - Validity

What is a good pre-employment test?

Before jumping to determine the usefulness of these tests, you need to understand what a pre-employment test is.

What is a pre-employment test?

Pre-employment tests are a standardized way of obtaining data on candidates during hiring. They are used to determine your potential employees' abilities and traits.

For a test to be deemed as "good", it must satisfy three main conditions:

- If your candidate retakes the test, they will get a score similar to the one from their previous attempt.

- If the test claims to measure a particular characteristic, it must measure that characteristic.

- The test is relevant to the job, i.e. it measures a trait essential for the job.

Simply put, a test must be reliable and valid for it to be considered "good". The extent to which a test satisfies these conditions is determined by two properties: Reliability and Validity.

Test Reliability

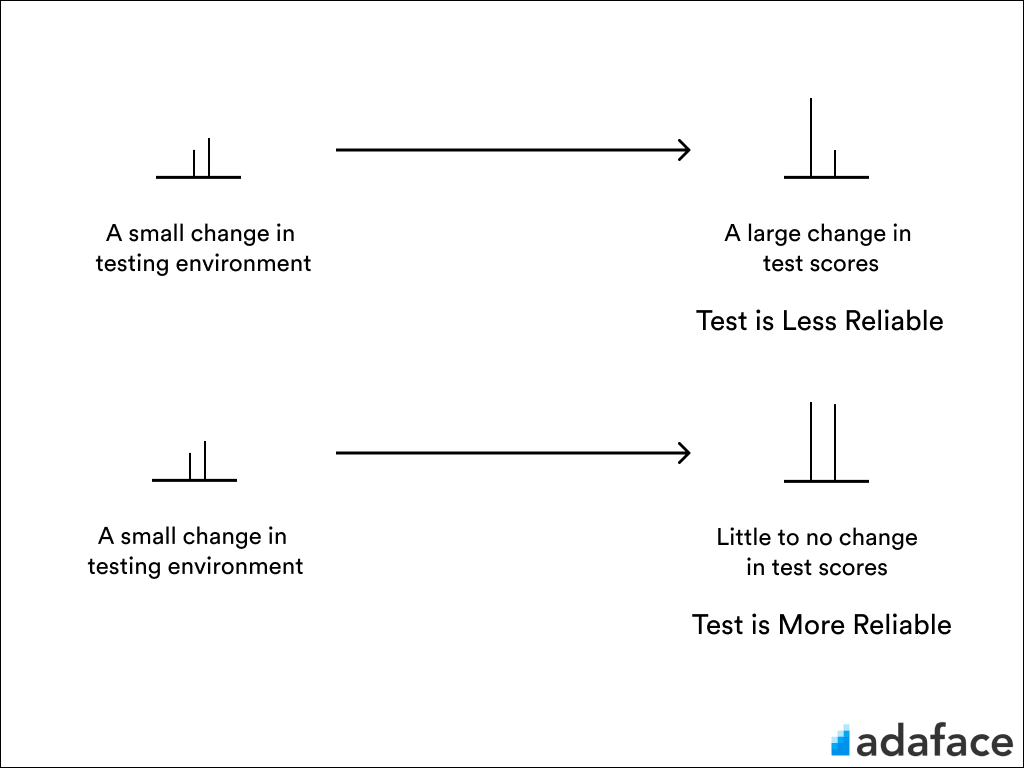

Reliability refers to the extent to which a test consistently measures a characteristic.

Think of reliability in this way: No matter how many times you take the test, you obtain similar scores. If this is true for your pre-employment test, it is considered reliable.

Say your candidate obtains different scores each time they take the test. How do you account for the change? Some possible reasons (errors) are:

- Candidate's physical and mental state: A major league football game the night before the test? They might have stayed up the whole night rooting for their favourite team and had no sleep before the test.

- Environmental factors: Changes in the testing environment, such as lighting, noise, or even the test administrator, can affect your candidate's performance in the test.

- Test form: If your candidate scores differently on online and offline forms of the test, the test is less reliable.

- Rater's judgements: Maybe the only change between the two test attempts is that different raters evaluated it. Different people have different experiences and might analyze the answers differently.

Simply put, the degree to which the test scores remain unaffected by measurement errors is known as the reliability of the test.

Types of Reliability in Assessment

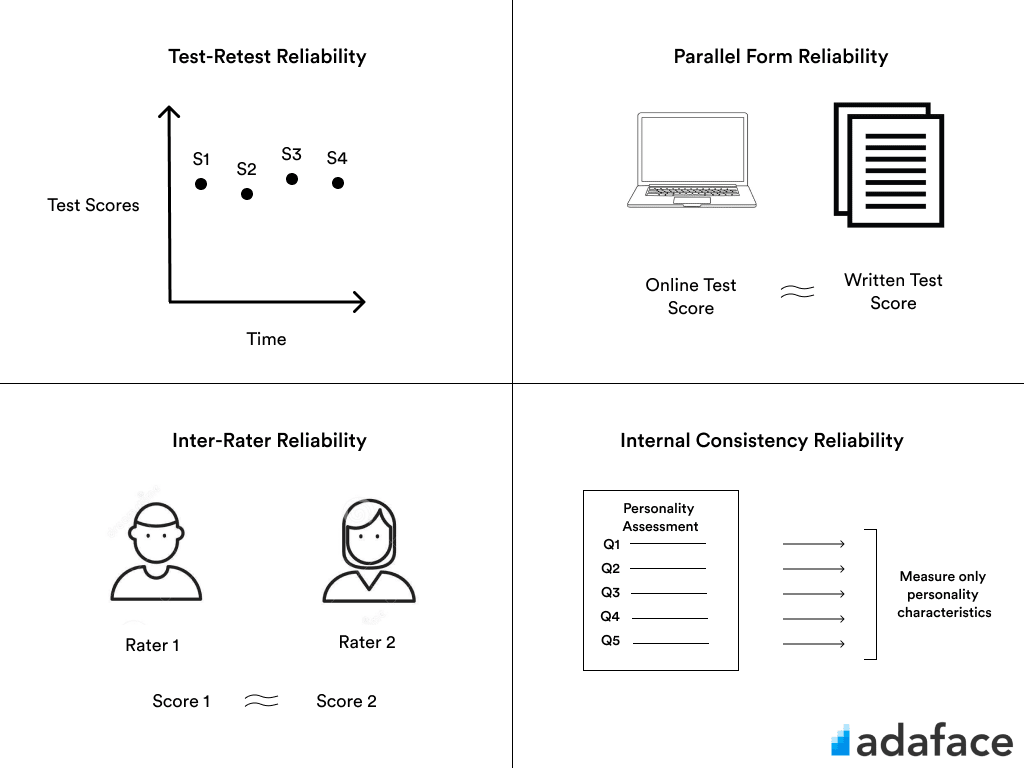

You want to use a pre-employment assessment that is reliable, right? But how do you determine the reliability of a test? There are multiple ways to do that.

- Test-Retest Reliability: Consistency across scores over time.

- Parallel Form Reliability: Consistency across scores for various forms of the test.

- Inter-Rater Reliability: Consistency across scores when rated by different individuals.

- Internal Consistency Reliability: The extent to which items on a test measure the same thing.
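As a rough illustration of the first item in the list above, test-retest reliability is commonly estimated as the Pearson correlation between scores from two sittings of the same test. The sketch below uses only the Python standard library; the function name `pearson_r` and the candidate scores are hypothetical and made up purely for illustration.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x, mean_y = mean(x), mean(y)
    # Sum of cross-products and sums of squared deviations
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("scores must vary for a correlation to be defined")
    return cross / sqrt(ss_x * ss_y)


# Hypothetical scores for the same five candidates on two sittings
first_attempt = [72, 85, 60, 90, 78]
second_attempt = [70, 88, 63, 87, 80]

test_retest_r = pearson_r(first_attempt, second_attempt)
print(f"test-retest r = {test_retest_r:.2f}")
```

The same function could be applied to scores from two forms of a test (parallel form) or from two raters (inter-rater), although dedicated statistics are often preferred for rater agreement.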

Importance of Reliability

You want to choose a test that gives similar scores no matter how many times your candidate takes it. Using a less reliable test can harm your organization: when scores reflect measurement error rather than ability, you risk ending up with poorly suited employees, a culture mismatch, and an inefficient team.

Interpretation of Reliability

Reliability is quantified using the reliability coefficient (r), which ranges from 0 to 1. Higher values of 'r' indicate more reliability. For a test to be usable, you typically want the coefficient to be higher than 0.7. A test below this benchmark may have limited use in practical workplace scenarios.
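One widely used reliability coefficient for internal consistency is Cronbach's alpha. The article does not say which coefficient it has in mind, so treat the following as a minimal sketch under that assumption; the function name `cronbach_alpha`, the constant `RELIABILITY_BENCHMARK`, and the response data are all hypothetical.

```python
from statistics import variance

RELIABILITY_BENCHMARK = 0.7  # benchmark mentioned in the text


def cronbach_alpha(item_scores):
    """Cronbach's alpha; one row per candidate, one column per test item."""
    n_items = len(item_scores[0])
    if n_items < 2 or len(item_scores) < 2:
        raise ValueError("need at least 2 items and 2 candidates")
    # Sample variance of each item (column) across candidates
    columns = list(zip(*item_scores))
    sum_item_vars = sum(variance(col) for col in columns)
    # Sample variance of each candidate's total score
    total_var = variance([sum(row) for row in item_scores])
    if total_var == 0:
        raise ValueError("total scores must vary across candidates")
    return (n_items / (n_items - 1)) * (1 - sum_item_vars / total_var)


# Hypothetical responses: five candidates, four items rated 1 to 5
responses = [
    [4, 5, 4, 5],
    [2, 3, 2, 2],
    [5, 5, 4, 4],
    [3, 3, 3, 4],
    [1, 2, 2, 1],
]

alpha = cronbach_alpha(responses)
print(f"alpha = {alpha:.2f}")
print("meets benchmark:", alpha > RELIABILITY_BENCHMARK)
```

With real data you would want far more than five candidates before trusting the estimate.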

Test Validity

Validity refers to what attribute the test measures and how well it measures that attribute.

It gives meaning to your candidate's test scores. If the test that you are using is valid, then the scores your candidates obtain on the test correlate with their job performance.

To consider an employment assessment valid, you want evidence that higher scores on the test mean better job performance. There are multiple ways in which we can obtain this evidence.

Types of Validity in Assessment

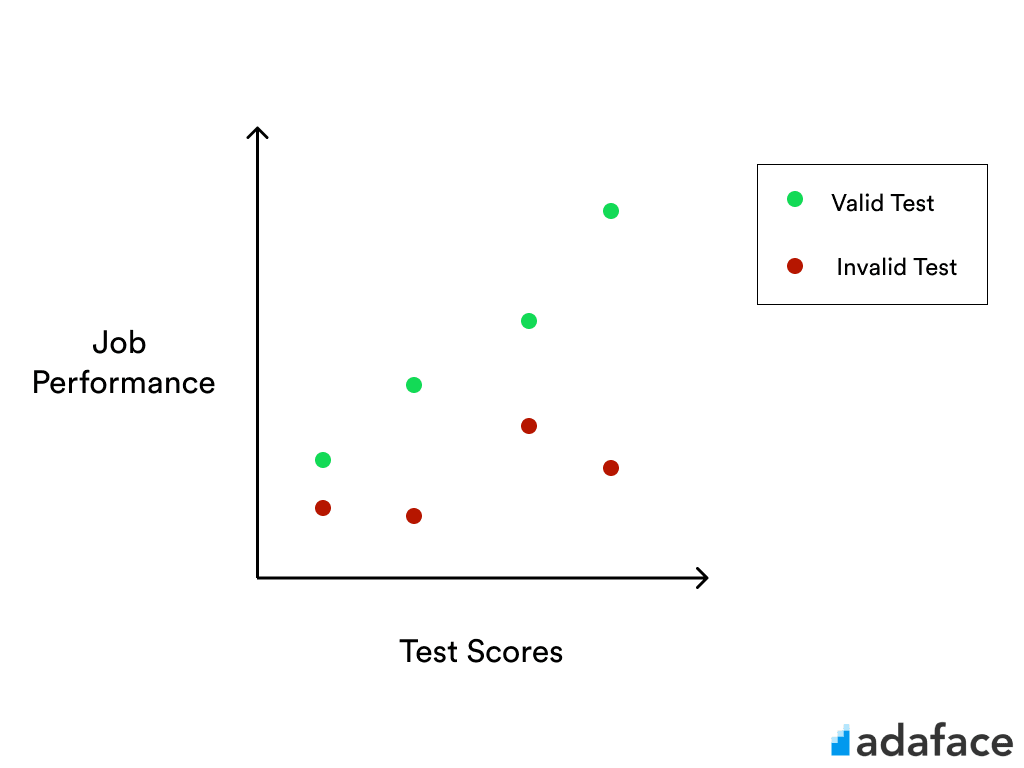

- Criterion-Related Validity requires that a test demonstrates a direct statistical relationship between test scores and job performance (like the one represented by the blue dot in the image above).

- Content-Related Validity requires the content of the test to encompass all the areas of the characteristic it is measuring. For example, if you give your candidate a Big Five personality test, its content should cover all five areas: Openness, Conscientiousness, Extraversion, Agreeableness and Neuroticism.

- Construct-Related Validity requires evidence that the test measures the underlying construct it claims to measure (say, reasoning ability or conscientiousness) and that this construct matters for the job. Say you have been using an employment assessment for quite some time and made many good hiring decisions. That track record is encouraging, but on its own it is closer to criterion-related evidence than to proof that the test measures the intended construct.

Importance of Validity

Validity is a direct indication of the usefulness of a test. Remember, reliability tells you how consistent the scores are, but validity gives meaning to these scores. Without validity, there is no way of relating your candidate's test scores to their job performance.

Interpretation of Validity

As with reliability, validity is interpreted through a coefficient: the validity coefficient, which expresses the correlation between test scores and a measure of job performance. For a single test, a coefficient greater than 0.35 is generally considered very beneficial.
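To make the validity coefficient concrete, here is a hedged sketch that correlates test scores at hiring with later job performance ratings and compares the result with the 0.35 mark mentioned above. The names `validity_coefficient` and `VALIDITY_BENCHMARK`, the rating scale, and all the data are hypothetical.

```python
from math import sqrt
from statistics import mean

VALIDITY_BENCHMARK = 0.35  # "very beneficial" mark mentioned in the text


def validity_coefficient(test_scores, performance):
    """Pearson correlation between test scores and job performance ratings."""
    if len(test_scores) != len(performance) or len(test_scores) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mean_t, mean_p = mean(test_scores), mean(performance)
    cross = sum((t - mean_t) * (p - mean_p)
                for t, p in zip(test_scores, performance))
    ss_t = sum((t - mean_t) ** 2 for t in test_scores)
    ss_p = sum((p - mean_p) ** 2 for p in performance)
    if ss_t == 0 or ss_p == 0:
        raise ValueError("values must vary for a correlation to be defined")
    return cross / sqrt(ss_t * ss_p)


# Hypothetical data: test score at hiring, manager rating (1 to 5) a year later
test_scores = [55, 62, 70, 74, 81, 88, 93, 67]
performance = [3.1, 2.8, 3.9, 3.0, 4.2, 3.6, 4.5, 3.4]

validity_r = validity_coefficient(test_scores, performance)
print(f"validity coefficient = {validity_r:.2f}")
print("above benchmark:", validity_r > VALIDITY_BENCHMARK)
```

A sample of eight employees is far too small to draw conclusions from; the point is only to show where the number comes from.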

How do Reliability and Validity apply to tests?

Applying these two properties to evaluate the usefulness of tests is the key takeaway from this post. Picture yourself playing darts. The objective is simple: strike the centre of the board. This is similar to what you are trying to accomplish with a pre-employment test. You want the test to do one thing, and you want it done right. Let's consider three scenarios:

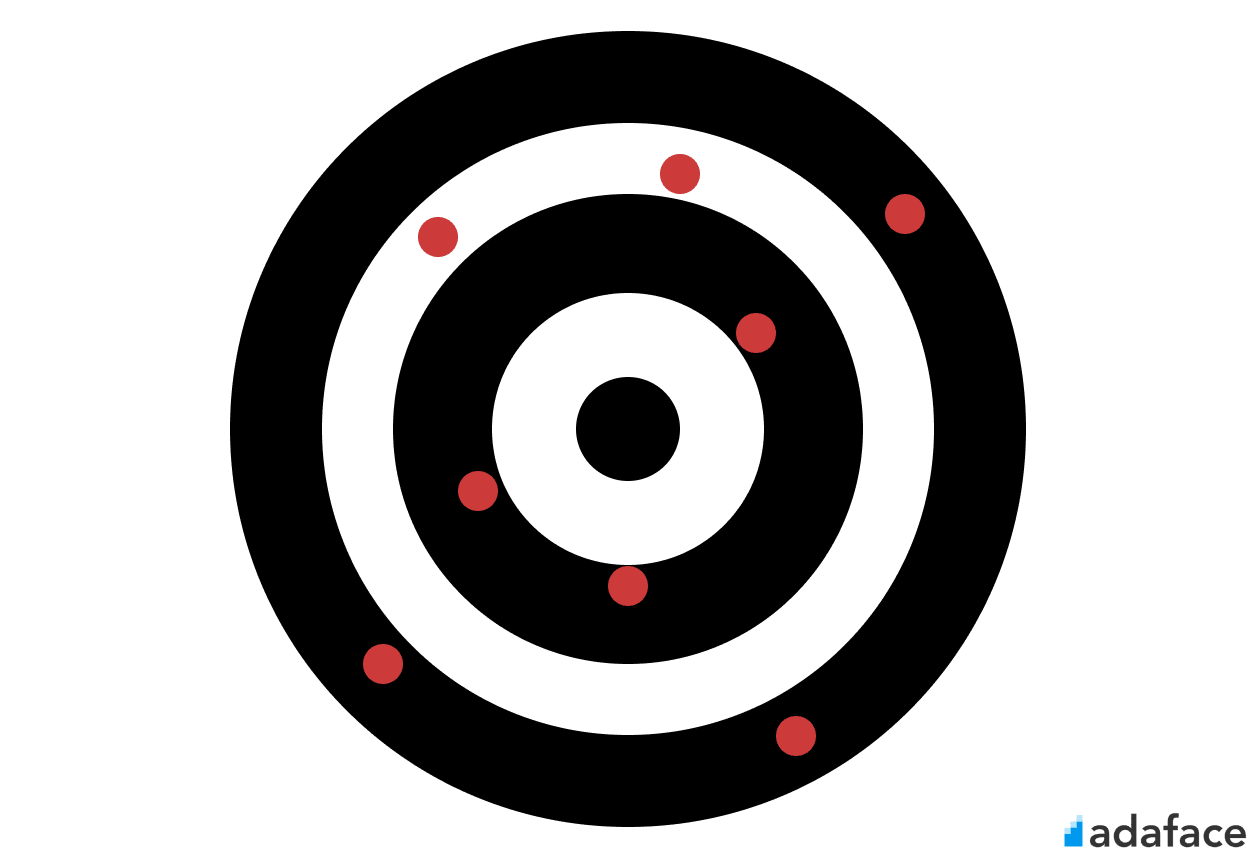

Not reliable, not valid:

Sorry to say, you have had a terrible game. Not only are your shots off target, but none of them consistently strike any point on the board. Similarly, tests that are neither valid nor reliable do not have consistent test scores, nor do they have any relation between the test score and job performance.

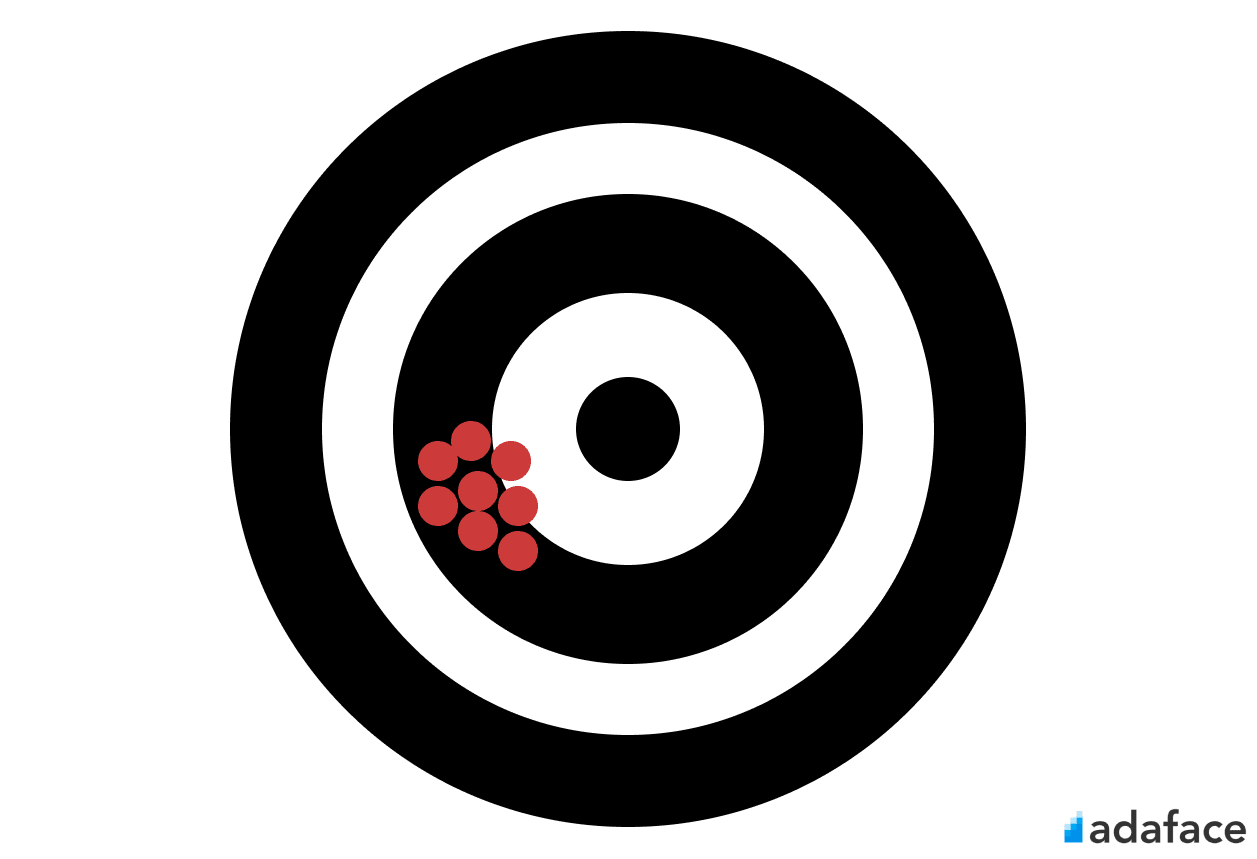

Reliable, but not Valid:

You had a better game than before since your shots consistently land in the same spot, but that spot is not the target, so you still aren't hitting the mark. With reliable tests, the scores are consistent, but without validity those scores have no relation to job performance.

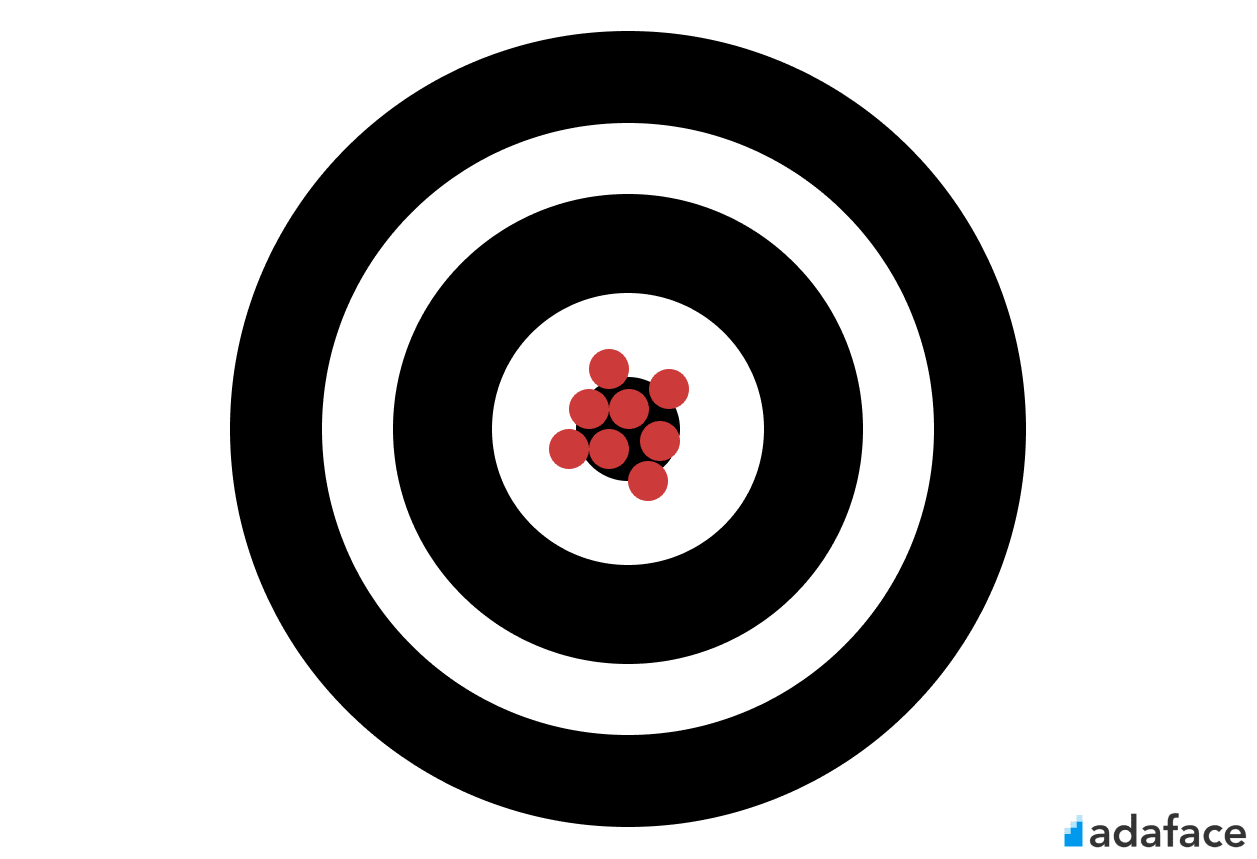

Reliable and Valid:

This is the performance you want to have every game. You consistently strike the target (or close to it). In this scenario, the test scores are consistent, and they relate closely to the candidate's job performance. Higher scores imply better job performance, which makes filtering candidates much easier.

On a Final Note

We here at Adaface focus on objective tests: technical tests that assess the actual skills used on the job, and psychometric tests, which research shows to have the highest correlation with job performance.

Do you want to know more about the various tests you can incorporate into your organization to help with your hiring needs? Check out our library of pre-employment assessments.